Projects

Some of the things I do/make

H3KNIX

Project/experiment in Linux distro development

I wanted to gain a deep understanding of the Linux way; building a distribution from scratch is the long road but definitely an educational one.

Why

As an educational project during a summer break from school, I worked on building a Linux distribution from scratch. I gathered information from around the web to learn the fundamentals and inner workings of a Linux distribution, from the filesystem layout to the software packages that make up the base of most Linux distributions. LFS (linuxfromscratch.org) was a great resource.

Building the kernel and bootstrapping the base system taught me how the Linux kernel compartmentalizes fundamental drivers as kernel modules, and how to configure and compile the kernel by hand. I also learned a lot about the suite of tools that make up a distro. For automatic loading of kernel modules based on hardware IDs, back in the day I used a tool called kudzu, which detected hardware and loaded the matching modules.
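
On current kernels, hardware-driven module loading works roughly like this: each device exports a `modalias` string in sysfs, and `/lib/modules/<release>/modules.alias` maps glob patterns to module names. The Python sketch below shows that matching step under those assumptions. It is an illustration of the general technique, not a reconstruction of kudzu, and the function names are hypothetical.

```python
import fnmatch
import os


def parse_modules_alias(text):
    """Parse modules.alias lines of the form 'alias <glob-pattern> <module>'."""
    table = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[0] == "alias":
            table.append((parts[1], parts[2]))
    return table


def modules_for_device(modalias, table):
    """Return the sorted module names whose alias pattern matches a device's modalias."""
    return sorted({module for pattern, module in table
                   if fnmatch.fnmatchcase(modalias, pattern)})


def scan_sysfs(root="/sys/devices"):
    """Yield the modalias string of every device that exports one under sysfs."""
    for dirpath, _dirs, files in os.walk(root):  # os.walk does not follow symlinks by default
        if "modalias" in files:
            try:
                with open(os.path.join(dirpath, "modalias")) as fh:
                    yield fh.read().strip()
            except OSError:  # some sysfs entries can be unreadable
                continue
```

On a running system you could feed `parse_modules_alias` the contents of `modules.alias`, iterate over `scan_sysfs()`, and hand each matching module name to `modprobe`.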

Package Management

With any distro, once you have the base built, you begin to consider how people are going to install software on the system. You bundle the common software your user base will want with the distro's install, but what about software that some users need and others don't? What about that software's dependencies? Different programs often share common dependencies, and those dependencies can have dependencies of their own. You can end up in a situation commonly referred to as dependency hell.
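
Resolving dependencies boils down to collecting everything a package transitively needs, then ordering it so each dependency is installed before its dependents (a topological sort). Here is a minimal sketch using Python's standard `graphlib` module (Python 3.9+); the `depends` mapping is a hypothetical stand-in for a package database.

```python
from graphlib import CycleError, TopologicalSorter


def install_order(targets, depends):
    """Return the targets plus all transitive dependencies, dependencies first.

    `depends` maps each package name to the names it directly requires.
    Raises KeyError for an unknown package and CycleError for circular dependencies.
    """
    needed, stack = set(), list(targets)
    while stack:  # walk the dependency graph to find everything required
        pkg = stack.pop()
        if pkg in needed:
            continue
        if pkg not in depends:
            raise KeyError(f"unknown package: {pkg}")
        needed.add(pkg)
        stack.extend(depends[pkg])
    # TopologicalSorter takes {node: predecessors}, so dependencies come out first.
    graph = {pkg: depends[pkg] for pkg in sorted(needed)}
    return list(TopologicalSorter(graph).static_order())
```

A dependency cycle raises `CycleError`, which is one concrete form of dependency hell; version conflicts are another, and this sketch does not attempt to handle them.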

I wanted to learn about package management, so I looked into how the most popular package managers operate. Fundamentally, they deliver an archive of files that is extracted to specific locations on the system. Packages sometimes include scripts to handle additional logic that simple file extraction cannot (such as detecting the presence of specific configuration files, or adding lines to a central configuration).
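
The extract-then-run-a-script model can be sketched with Python's `tarfile` module. This is a minimal illustration, not any particular package manager's code: the hook name `install.sh` and the `$ROOT` environment variable are assumptions, and the path check refuses archives that try to write outside the target root.

```python
import os
import subprocess
import tarfile


def _checked_members(tar, root):
    """Return archive members, refusing any path that would land outside root."""
    root = os.path.realpath(root)
    members = []
    for member in tar.getmembers():
        target = os.path.realpath(os.path.join(root, member.name))
        if os.path.commonpath([root, target]) != root:
            raise ValueError(f"unsafe path in package: {member.name}")
        members.append(member)
    return members


def install_package(archive, root="/", hook="install.sh"):
    """Extract a package archive into root, then run its optional hook script.

    `hook` is a hypothetical script stored at the top of the archive; it is piped
    to sh rather than installed, with $ROOT set to the target root.
    Returns the installed paths relative to root.
    """
    with tarfile.open(archive, "r:*") as tar:
        members = [m for m in _checked_members(tar, root) if m.name != hook]
        # Use the "data" extraction filter where this Python provides it.
        extra = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(root, members=members, **extra)
        try:
            script = tar.extractfile(hook)
        except KeyError:  # the package ships no hook
            script = None
        if script is not None:
            env = dict(os.environ, ROOT=root)
            subprocess.run(["sh", "-s"], input=script.read(), env=env, check=True)
    return [m.name for m in members]
```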

I then built my own package manager. The package management system was named "capsules" (hence the ".cap" extension). I built a variety of sandbox systems where I could construct packages in a chrooted, isolated environment, and I used a variety of tools to track the files added by a `make install` operation. I compiled sets of packages, tracked their files, and created archives (bzip2-compressed tarballs with a ".cap" extension). Each capsule contained a full directory structure starting at the system root ("/"). Files to be installed were placed in their corresponding folders, so a binary/executable was located (relative to the capsule root) under usr/bin or usr/local/bin as appropriate. The structure also included a path to the package management database file for that capsule. Each capsule had a flat-file database entry matching its package name, containing version information and the list of paths to every file the capsule installed.
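
One common way to track what `make install` adds is to snapshot the file tree before and after, then diff the two. The sketch below does that and writes a bzip2-compressed tarball with a `.cap` extension, mirroring the layout described above. The database location `var/lib/capsules/<name>` and its `key=value` plus path-list format are hypothetical, since the original format isn't documented here.

```python
import io
import os
import tarfile

DB_DIR = "var/lib/capsules"  # hypothetical location of per-capsule database files


def snapshot(root):
    """Return the set of file paths, relative to root, that currently exist under root."""
    found = set()
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            found.add(os.path.relpath(os.path.join(dirpath, name), root))
    return found


def build_capsule(root, before, name, version, out_dir):
    """Archive every file added under root since the `before` snapshot as <name>-<version>.cap.

    The capsule mirrors the filesystem from "/" and carries a flat-file database
    entry listing the package version and every installed path.
    """
    added = sorted(snapshot(root) - before)
    db_text = f"name={name}\nversion={version}\n" + "".join(f"/{path}\n" for path in added)
    db_bytes = db_text.encode("utf-8")
    capsule = os.path.join(out_dir, f"{name}-{version}.cap")
    with tarfile.open(capsule, "w:bz2") as tar:  # a bzip2-compressed tarball
        for path in added:
            tar.add(os.path.join(root, path), arcname=path)
        entry = tarfile.TarInfo(f"{DB_DIR}/{name}")
        entry.size = len(db_bytes)
        entry.mode = 0o644
        tar.addfile(entry, io.BytesIO(db_bytes))
    return capsule
```

In a chroot sandbox you would take `before = snapshot(root)`, run `make install`, then call `build_capsule`. Build systems that honor `make DESTDIR=... install` avoid the diff entirely by installing into an empty staging directory.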

In addition to the capsule format, there was a central repository that hosted the packages for the i686 architecture. The repository tracked packages and their dependencies in a relational database (MySQL) and was accessed through an API written in PHP. The API returned a delimited text response for each of its calls (such as dependency check, package search, package details, etc.).
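
A client for a delimited-text API like this takes only a few lines of Python. The endpoint URL, the `action` parameter, and the `name|version|arch|dep1,dep2` line format below are all assumptions for illustration; the real API's calls and delimiters aren't recorded here.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode
from urllib.request import urlopen

REPO_API = "http://repo.example.org/api.php"  # hypothetical endpoint


@dataclass
class PackageInfo:
    name: str
    version: str
    arch: str
    depends: list[str] = field(default_factory=list)


def parse_response(body):
    """Parse lines of the assumed form name|version|arch|dep1,dep2 (deps may be empty)."""
    packages = []
    for line in body.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and comment lines
            continue
        name, version, arch, deps = line.split("|", 3)
        packages.append(PackageInfo(name, version, arch, [d for d in deps.split(",") if d]))
    return packages


def call_api(action, **params):
    """Send e.g. call_api("search", q="vim") and parse the delimited reply."""
    query = urlencode({"action": action, **params})
    with urlopen(f"{REPO_API}?{query}", timeout=10) as resp:
        return parse_response(resp.read().decode("utf-8"))
```

A plain delimited format keeps the client trivial in any language, which suits a system that mixed bash, Perl, and Python over its lifetime.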

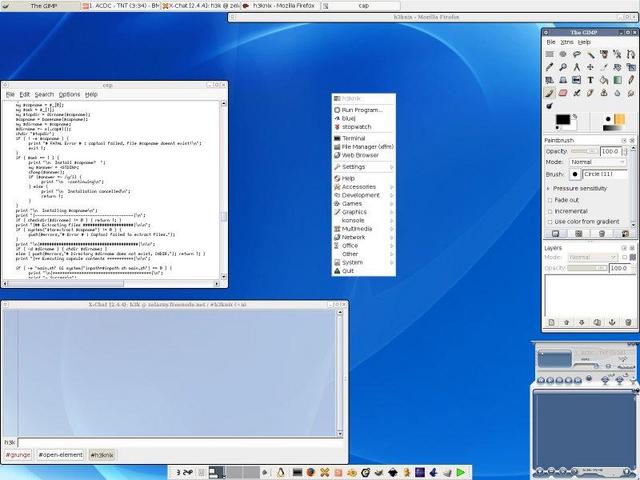

Because the distro was developed as a learning experience, I tried out a variety of programming languages for the package management system. I started out building a good chunk of it in bash scripts, later switched to Perl, and tried Ruby before settling on Python. Python had many extensions (modules) that gave quick access to a lot of functionality without reinventing the wheel. Where needed, I kept bash scripts around to support some of the processes.

But I digress; I could go on and on about the package manager and how it operated.

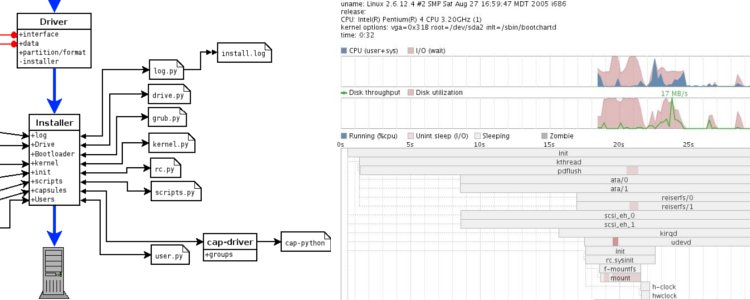

Installer

Initially, while beta testing and developing, I simply kept a disk image. The next need was an installation program along with an ISO (which eventually became a live CD) so others could download the distro and install it on their machines. I built the installation program in Python. Many features were in development, but the version that shipped with the ISO used a text-based install (keyboard-driven menus using the ncurses Python library). The user would choose how to partition the disks, select a root partition, and configure a swap partition. They would then choose the desired filesystem format, select their timezone, and pick optional packages to install, followed by their network settings (static or dynamic IP, nameservers, etc.). Finally, the user set the root password, and the installer went to work partitioning and formatting disks, installing the base and optional packages, generating config files (such as fstab and passwd/group), and ultimately setting up the bootloader.
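
Generating config files such as fstab from the user's choices is mostly string assembly. A minimal sketch, assuming the installer has already collected the root device, filesystem type, and optional swap device; the function names are hypothetical.

```python
import os


def fstab_text(root_dev, root_fs, swap_dev=None):
    """Build /etc/fstab content from the installer's partition choices.

    Columns: device, mount point, type, options, dump, fsck pass.
    """
    rows = [(root_dev, "/", root_fs, "defaults", "1", "1")]
    if swap_dev:
        rows.append((swap_dev, "swap", "swap", "pri=1", "0", "0"))
    # Kernel virtual filesystems.
    rows.append(("proc", "/proc", "proc", "nosuid,noexec,nodev", "0", "0"))
    rows.append(("sysfs", "/sys", "sysfs", "nosuid,noexec,nodev", "0", "0"))
    return "".join("\t".join(row) + "\n" for row in rows)


def write_fstab(target_root, text):
    """Write the generated table into the newly installed system."""
    path = os.path.join(target_root, "etc", "fstab")
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "w") as fh:
        fh.write(text)
```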

Development versions of the installer included a graphical interface (keyboard and mouse). I considered using Anaconda (the installer made popular by Red Hat distros) but ultimately decided to build my own. I built automatic detection of graphics cards and resolutions to generate the Xorg configuration needed to start a graphical environment and launch the Python installer. The installer used an object-oriented design: an interface class defined the methods each front end had to support, so the GUI could be swapped back out for the text-based ncurses installer. There was also an experimental class that let the user install the distro from a browser on a separate machine. It used Python modules to run a basic HTTP server and let the user select installation options via an HTTPS link (using the system's dynamic IP).
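
The swappable front-end design can be sketched with Python's `abc` module: the installer logic talks only to an abstract interface, and each front end (ncurses, graphical, browser-based) implements it. The class and method names below are hypothetical and the filesystem choices are example values. `ScriptedUI` is a stand-in front end that answers prompts from a list, which also makes the installer logic testable.

```python
from abc import ABC, abstractmethod


class InstallerUI(ABC):
    """Methods every front end must implement."""

    @abstractmethod
    def choose(self, prompt, options):
        """Return one item from options."""

    @abstractmethod
    def ask_text(self, prompt, secret=False):
        """Return free-form text, optionally without echoing it."""

    @abstractmethod
    def show_progress(self, message, percent):
        """Report installation progress."""


class Installer:
    """Installer logic that depends only on the InstallerUI interface."""

    def __init__(self, ui):
        self.ui = ui

    def run(self):
        settings = {
            "filesystem": self.ui.choose("Filesystem for /", ["ext3", "reiserfs", "xfs"]),
            "network": self.ui.choose("Network setup", ["dhcp", "static"]),
            "root_password": self.ui.ask_text("Root password", secret=True),
        }
        steps = ["partition", "format", "install packages", "write config", "bootloader"]
        for i, step in enumerate(steps, 1):
            self.ui.show_progress(step, 100 * i // len(steps))
        return settings


class ScriptedUI(InstallerUI):
    """A non-interactive front end that replays canned answers."""

    def __init__(self, answers):
        self.answers = list(answers)
        self.progress = []

    def choose(self, prompt, options):
        answer = self.answers.pop(0)
        if answer not in options:
            raise ValueError(f"{answer!r} is not one of {options}")
        return answer

    def ask_text(self, prompt, secret=False):
        return self.answers.pop(0)

    def show_progress(self, message, percent):
        self.progress.append((message, percent))
```

Swapping front ends then means passing a different `InstallerUI` subclass to `Installer`; the partitioning and configuration logic never changes.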

Project traction and end of life

As h3knix developed, it attracted some attention for its ability to install quickly and run efficiently on i686 platforms. I targeted performance builds, with kernel and compiler settings that took advantage of architecture-specific optimizations. The distro favored a minimal approach, allowing it to run very fast on powerful machines while maintaining decent performance on older ones. It was a great experiment and learning project to work on.

It garnered attention within the Linux community, and a few contributors signed on to provide infrastructure, help build packages, and write technical documentation for new users and users with less Linux experience. We were also able to use some infrastructure from SourceForge and other open source advocates. Getting hosting space for the ISOs was tough; not many providers wanted to front the bandwidth and disk space needed to store the ISOs and the package repository.

As with many open source projects, when the creator no longer actively contributes, the project can die. h3knix fizzled out when I no longer had time to maintain the system, build new packages, develop new features, support processes, or cover infrastructure costs.